The KQL Playbook (Play #3): Mastering Strings, Timestamps, and Ugly JSON

Alright, class. Welcome back to the KQL Playbook.

In our last session, we turned your where clause into a set of surgical tools. You learned the critical difference between == and =~, and you now understand when to use the has sniper rifle versus the contains shotgun, and no longer get stonewalled by "zero results" on queries you know should have worked. You’re finding the logs you’re looking for with precision. You are, without a doubt, levelling up.

But now you’ve run into another problem. Finding the right log is only half the battle.

You've been given a seemingly simple task: find every user in the company who is logging in with an outdated, vulnerable version of the Chrome browser. You feel a surge of confidence. You jump into the SigninLogs, filter for your users, and expand the log details to examine the UserAgent field, ready to pull your report. And you're greeted with this:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/140.0.0.0 Safari/537.36

What do you do with that? It's a mess. The critical piece of evidence, the browser version, is buried deep inside a standardised string. You want to be efficient so you can't just summarize by that whole field. And you certainly can't write a where clause to filter for a version number when it's glued to the word "Chrome" and surrounded by other text.

This is the reality of our job. Logs are often messy, nested, and structured in ways that are efficient for storage but infuriating for analysis. The raw data can be unrefined ore. To get to the gold, you have to be a data janitor, a digital archaeologist, and a text surgeon all at once.

Today’s play is about cleaning up the crime scene. I am going to teach you how to tame your messy data. You'll learn how to wrangle chaotic text strings, manipulate time to find attack patterns, and unpack the cryptic treasure chests of JSON data.

📚 The Complete KQL Playbook Series

You're reading part of The KQL Playbook, a comprehensive five-part journey from KQL beginner to threat-hunting professional. Whether you're just starting out or levelling up your detection skills, each play builds on the last.

The Five Plays:

Play #1: A Beginner's Guide to Talking to Your Data

The pipe (|), where, summarize, project, and sort by. The foundational moves that turn a blank query window into your first real conversations with data.

Play #2: Mastering the Matching Game

The difference between == and =~, when to use has vs. contains, and why one wrong character can blind your entire detection strategy.

Play #3: Taming Messy Dataparse, extract, split, bin, and mv-expand. Your toolbox for wrestling chaotic strings, timestamps, and JSON objects into submission.

Play #4: The Correlation Play: Joining Tables and Enriching Data

Master let, join, and the art of connecting disparate log sources to build complete narratives of attacker movement.

Play #5: The Anomaly Play: Finding the 'Weird'make_set, set_difference, and arg_max. Your weapons for hunting threats you don't already know about.

Jump to Your Level:

Beginner? Start with Play #1

Getting zero results? Play #2 has the answer

Drowning in messy data? Play #3 fixes that

Need to correlate events? Play #4

Ready to hunt anomalies? Play #5

Remember: Don't try to memorise syntax. Master the mindset. Every complex query is just these fundamentals chained together with pipes.

Wrangling Text: parse, extract, and split

Your most valuable intel is often buried inside a single, long string field. Command lines, URLs, user agent strings, and file paths are all goldmines, but you need the right tools to excavate the treasure.

Scenario: "Who is logging in with outdated browsers?"

Imagine your CISO walks over with a new directive: "I want a report of every user who has signed in using a version of Chrome older than 135. Outdated browsers are a huge security risk."

You jump into the SigninLogs table. The data is there, inside the UserAgent field. But it looks like this:

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/140.0.0.0 Safari/537.36

How do you filter on the Chrome version? You can't just do | where UserAgent < "Chrome/135" because that's not how string comparison works. The critical piece of information, "Chrome/140.0.0.0", is buried in the middle of a long, standardized string. A rookie might export all the logs to a CSV and try to wrangle it in Excel. The professional carves it out directly in KQL.

The Play: Surgical Extraction with parse

The parse operator is your scalpel for well structured but messy text. You give it a template of what the string looks like, and it carves out the pieces you want into new columns.

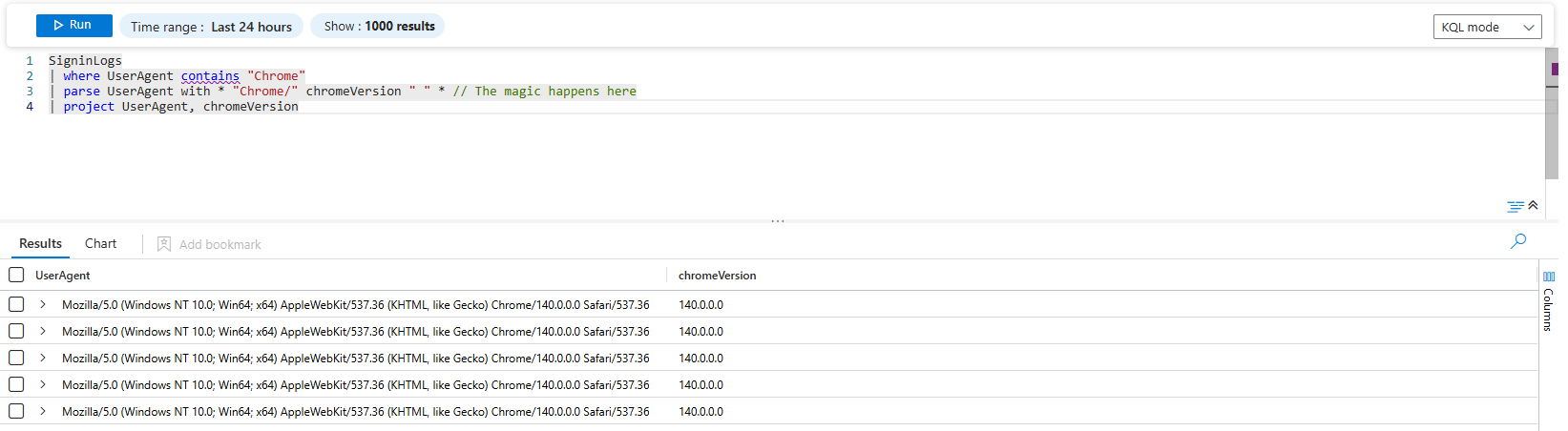

// Carving out the browser version

SigninLogs

| where UserAgent contains "Chrome"

| parse UserAgent with * "Chrome/" chromeVersion " " * // The magic happens here

| project UserAgent, chromeVersionLet's break down that parse statement in plain English:

parse UserAgent with: "Hey KQL, I want you to cut up theUserAgentfield."*: "First, find a chunk of text of any length and just throw it away." (This matchesMozilla/5.0 ... (KHTML, like Gecko))"Chrome/": "Next, find the literal string"Chrome/". This is our anchor point."chromeVersion: "After the anchor, grab everything up to the next space and put it into a new column calledchromeVersion."" " *: "Finally, match that space and throw away the rest of the string."

The result is a clean, workable column.

The Power Move: Getting Granular with split

Okay, so we have the version 140.0.0.0. But what if you only care about the major version number (the 140)? We need to split that string again. For this, the split() function is perfect.

Let's refine our query to get to the final answer for the CISO.

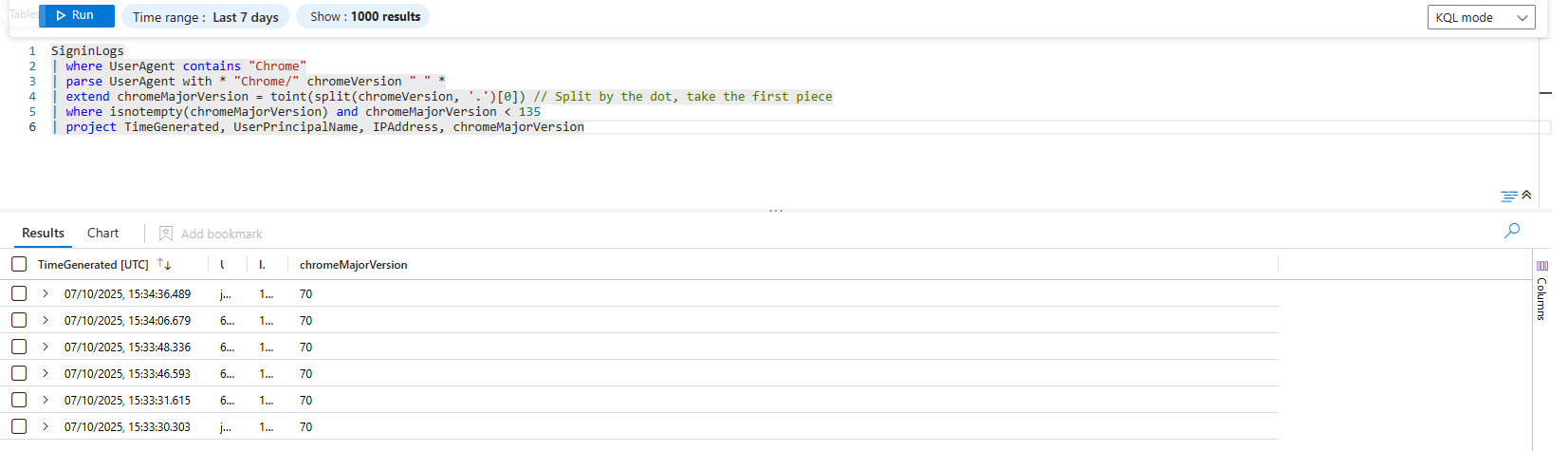

SigninLogs

| where UserAgent contains "Chrome"

| parse UserAgent with * "Chrome/" chromeVersion " " *

| extend chromeMajorVersion = toint(split(chromeVersion, '.')[0]) // Split by the dot, take the first piece

| where isnotempty(chromeMajorVersion) and chromeMajorVersion < 135

| project TimeGenerated, UserPrincipalName, IPAddress, chromeMajorVersionThis is a multi-stage cleanup operation:

- We

parseout the full version string:140.0.0.0 - We use

extendto create a new column,chromeMajorVersion. - Inside, the

split(chromeVersion, '.')function takes our version string and splits it into an array of pieces using the.as the separator:["140", "0", "0", "0"]. - We use

[0]to grab the very first piece of that array:"140". - We wrap it all in

toint()to convert the text"140"into the number140, so we can do a proper numerical comparison (< 135).

You just went from a messy, unusable string to a precise, actionable answer. You can now give the CISO a list of specific users who need to update their browsers, all without ever leaving the Sentinel query window. This is the essence of being a data janitor.

Mastering Time: bin, ago, and between

Time is the single most important piece of context in any investigation. Was that failed login a single typo or part of a 10-minute barrage? Did those two suspicious processes run seconds apart or hours apart? To see these patterns, you need to group your data into time buckets.

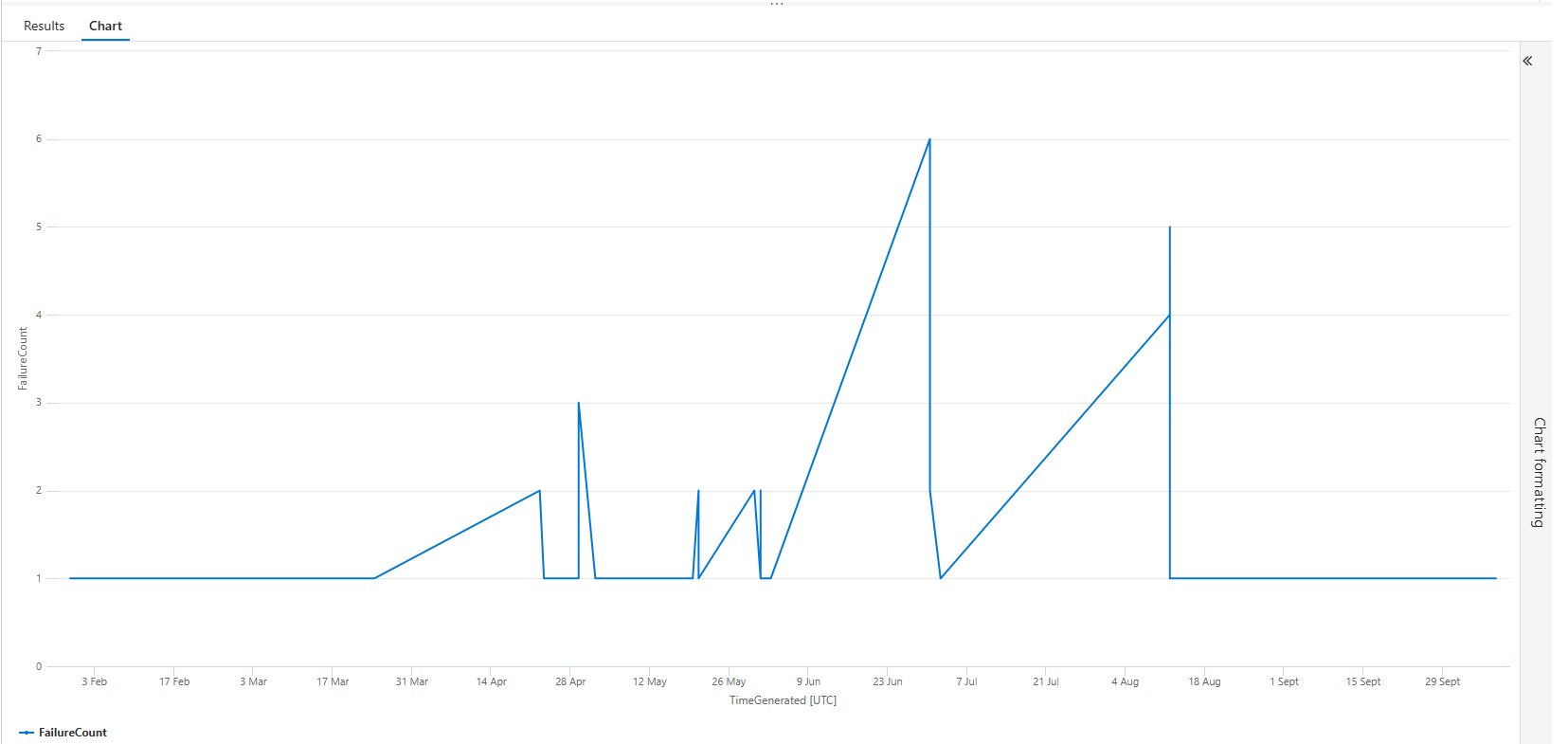

Scenario: "Visualising a Password Spray Attack."

You get an alert for "Multiple failed logins from a single IP." You run a query and see thousands of failures against hundreds of accounts. It's clearly a password spray. Now you need to report to management: when did it start, how long did it last, and what was its peak intensity? A giant table of failed logins won't answer that question effectively. A picture will.

The Play: Finding the Pattern with bin and render timechart

The bin() function is your time-bucketing machine. It takes a timestamp and "rounds it down" to the nearest interval you specify (minutes, hours, days). This allows you to group events that happened "at roughly the same time" together.

SigninLogs

| where TimeGenerated > ago(1d)

| where ResultType == 50126 // Error code for invalid username/password

| summarize FailureCount = count() by bin(TimeGenerated, 1m) // Group failures into 1-minute buckets

| render timechartLet’s walk through this:

- We filter our

SigninLogsfor failed logins in the last day. summarize FailureCount = count() by bin(TimeGenerated, 1m)is the core of the play. Instead of summarizing byUserPrincipalNameorIPAddress, we are summarizing by a time interval. This creates a simple table with two columns: a timestamp (for each one-minute interval) and the number of failures that occurred within that minute.| render timechart: This is the magic. This single command takes that summarized table and turns it into a beautiful graph.

Instantly, you have a visual timeline of the attack. You can see the exact minute the spray started, the moment it peaked with hundreds of attempts per minute, and the moment it stopped. This isn't just data; it's a story.

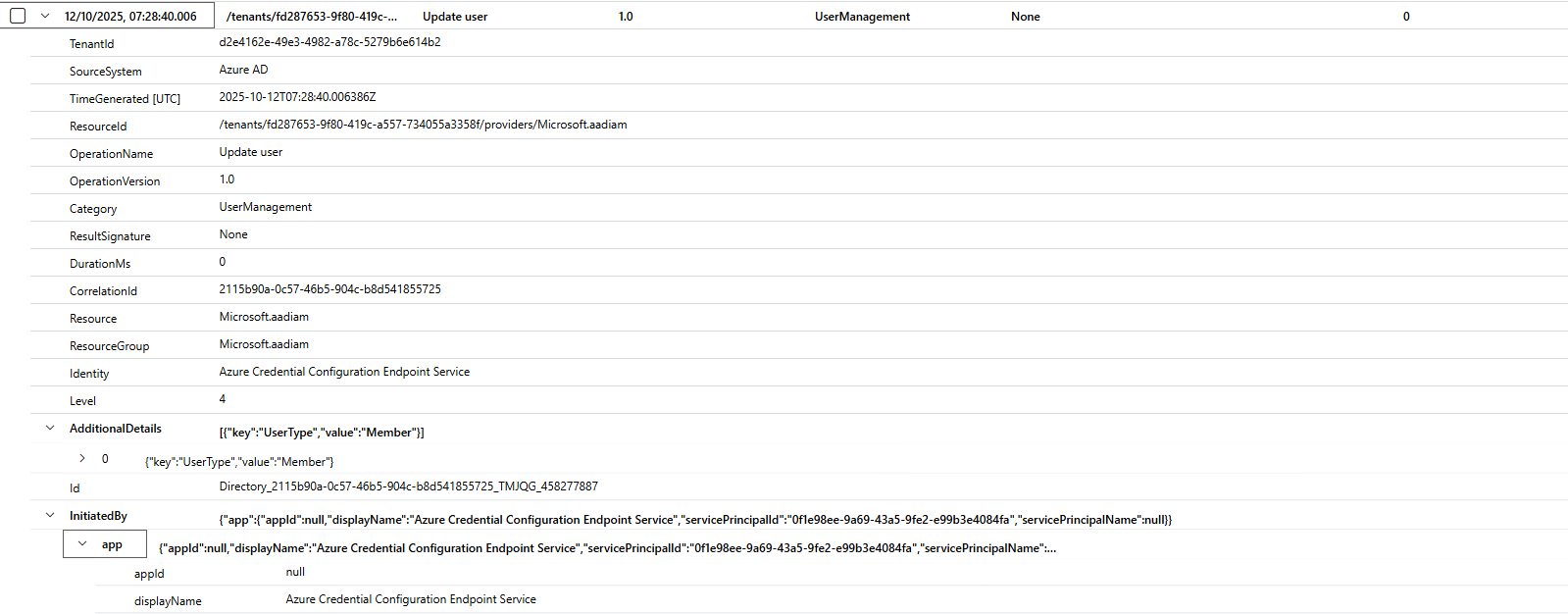

Unpacking JSON: Dot Notation and mv-expand

Welcome to the world of modern logs. Gone are the days of neat, simple columns where one field equals one piece of information. Today, your most critical data is often stuffed inside a single field, formatted as a complex, multi-layered JSON object. The AuditLogs table is the prime example of this. Looking at the raw data is like trying to read the Matrix.

Scenario: "What user accounts were recently updated, and who (or what) updated them?"

This is a fundamental audit question. The log for this "Update user" event exists, but the user being changed (the "target") and the user or application performing the change (the "actor") are buried inside complex JSON fields named TargetResources and InitiatedBy.

A raw TargetResources field for a user update looks something like this:

How do you get important info out of that mess?

The Play: Accessing Data with Dot Notation and extend

For structured JSON objects and arrays, you can use dot notation to navigate through the layers, just like a file path. We use the extend operator to create new, clean columns from this valuable, but hidden, data.

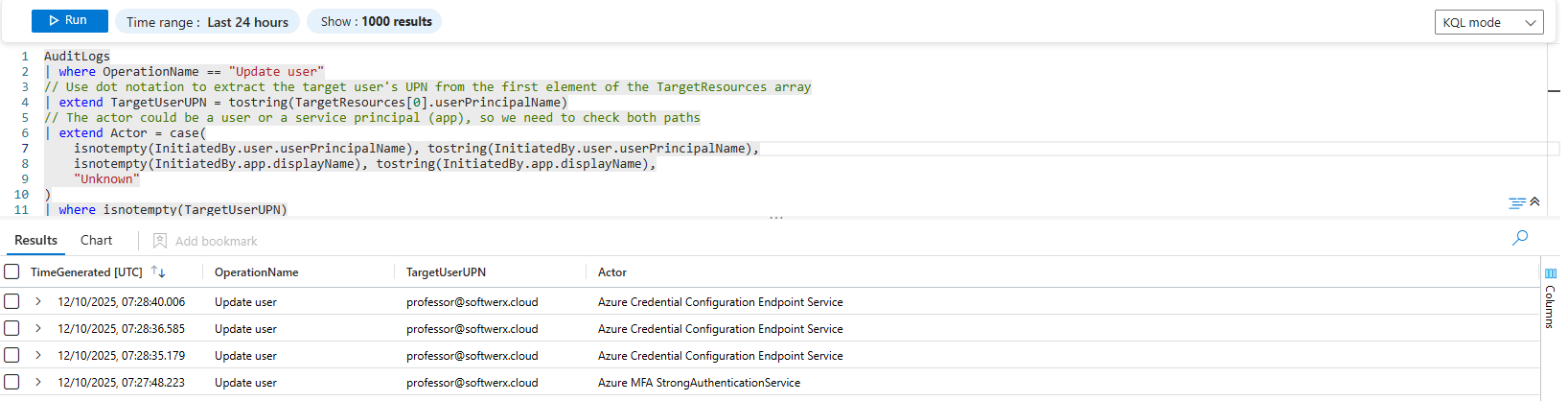

AuditLogs

| where OperationName == "Update user"

// Use dot notation to extract the target user's UPN from the first element of the TargetResources array

| extend TargetUserUPN = tostring(TargetResources[0].userPrincipalName)

// The actor could be a user or a service principal (app), so we need to check both paths

| extend Actor = case(

isnotempty(InitiatedBy.user.userPrincipalName), tostring(InitiatedBy.user.userPrincipalName),

isnotempty(InitiatedBy.app.displayName), tostring(InitiatedBy.app.displayName),

"Unknown"

)

| where isnotempty(TargetUserUPN)

| project TimeGenerated, OperationName, TargetUserUPN, ActorLet's dissect this archaeological dig:

TargetResources[0].userPrincipalName: This tells KQL, "Go into theTargetResourcesfield. It's an array, so go to the first item (arrays start at index0). Inside that item, grab the value of theuserPrincipalNamekey."case(...): This is a neat way to handle logs with variable structures. We check if the actor was a user (InitiatedBy.user...). If that path is empty, we then check if it was an app (InitiatedBy.app...). This makes our query robust.tostring(): We wrap everything intostring()because KQL sees the JSON values as a "dynamic" type. It's best practice to convert them to a specific data type like a string for stable, predictable queries.

The Power Move: Unpacking Changes with mv-expand

The query above is great for knowing who was changed. But what if you need to know what was changed? Was their phone number updated, or were they promoted to a Member user type? That critical detail is buried even deeper, in a nested array called modifiedProperties.

This is where dot notation isn't enough. For this, you need the master key to JSON: mv-expand.

mv-expand is a miracle worker. It takes a single row that has an array in one of its columns and creates a copy of that row for each item in the array. It "unpacks" the list into separate, clean rows, turning one event into many, more detailed events.

Scenario: "Show me the specific attributes that were changed on user accounts."

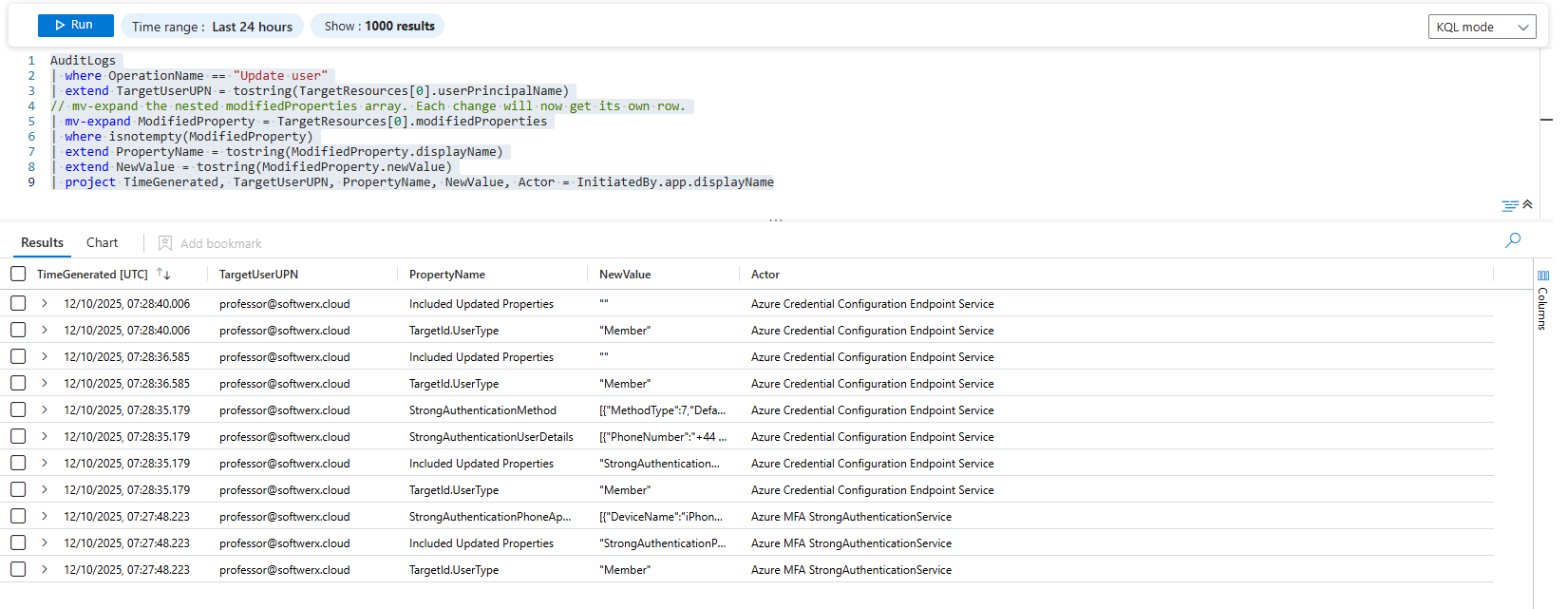

AuditLogs

| where OperationName == "Update user"

| extend TargetUserUPN = tostring(TargetResources[0].userPrincipalName)

// mv-expand the nested modifiedProperties array. Each change will now get its own row.

| mv-expand ModifiedProperty = TargetResources[0].modifiedProperties

| where isnotempty(ModifiedProperty)

| extend PropertyName = tostring(ModifiedProperty.displayName)

| extend NewValue = tostring(ModifiedProperty.newValue)

| project TimeGenerated, TargetUserUPN, PropertyName, NewValue, Actor = InitiatedBy.app.displayNameThis is what makes you a pro-level analyst. Let's break it down:

- We start the same way, by pulling out the

TargetUserUPN. mv-expand ModifiedProperty = TargetResources[0].modifiedProperties: This is the core of the play. It says, "Look inside theTargetResourcesarray, at themodifiedPropertiesnested array. For each item in that list, create a brand new row, and put the item's content into a temporary column calledModifiedProperty."- Now, instead of one row, we might have two, three, or more!

extend PropertyName = tostring(ModifiedProperty.displayName): Since each new row'sModifiedPropertycolumn contains a single JSON object (like{"displayName":"TargetId.UserType", ...}), we can now easily use dot notation to pull out thedisplayNameandnewValue.

A single "Update user" event that changed two properties, which looked like one messy row in the logs, now looks like this in your results:

You've successfully transformed one cryptic log event into a clean, human-readable audit trail showing exactly what changed. That is the power of taming ugly JSON.

Class dismissed.